TerseJSON Performance Benchmarks

Memory efficiency through lazy Proxy expansion — plus network savings as a bonus

Benchmark report • January 2026

Executive Summary

TerseJSON's Proxy delivers 70% memory savings through lazy expansion — only accessed fields materialize in memory. Combined with 86% fewer allocations, this translates to faster GC, lower memory pressure, and better performance on memory-constrained devices. As a bonus, you also get 30-80% smaller network payloads.

1Memory Efficiency: The Primary Advantage

Why Memory Matters More Than Wire Size

Network compression (gzip/Brotli) only helps data in transit. Once the payload arrives, it's fully decompressed and parsed into memory. TerseJSON's Proxy solves a different problem: it prevents unused fields from ever allocating in the first place.

Key insight: Binary formats like Protobuf and MessagePack require full deserialization. Every field allocates memory whether you access it or not. TerseJSON's lazy Proxy only expands keys on-demand.

Memory Benchmarks: 1,000 Records x 21 Fields

| Fields Accessed | Normal JSON | TerseJSON Proxy | Memory Saved |

|---|---|---|---|

| 1 field | 6.35 MB | 4.40 MB | 31% |

| 3 fields (list view) | 3.07 MB | ~0 MB | ~100% |

| 6 fields (card view) | 3.07 MB | ~0 MB | ~100% |

| All 21 fields | 4.53 MB | 1.36 MB | 70% |

Why ~0 MB / ~100% savings? The Proxy is so lightweight that accessing partial fields triggers garbage collection of unused data. The original compressed payload stays in memory, keys are translated on-demand without creating intermediate objects.

2The Proxy Does Less Work, Not More

A common misconception: "Adding a Proxy layer must add overhead." In reality, the Proxy does FEWER operations than standard JSON.parse because it parses a smaller string and defers expansion.

Standard JSON.parse

Parse 890KB string

Allocate 1000 objects x 21 fields = 21,000 properties

Access 3 fields per object

GC collects 18,000 unused properties

TerseJSON Proxy

Parse 180KB string (smaller = faster)

Wrap in Proxy (O(1), ~0.1ms)

Access 3 fields = 3,000 properties created

18,000 properties NEVER EXIST

CPU Benchmark Results

| Metric | Standard JSON | TerseJSON Proxy | Improvement |

|---|---|---|---|

| String to parse | 890 KB | 180 KB | 80% smaller |

| Property allocations | 21,000 | 3,000 | 86% fewer |

| GC pressure | 18,000 unused | 0 unused | 100% reduction |

| CPU overhead | baseline | <5% | Near-zero |

3TerseJSON vs Binary Formats

Protobuf and MessagePack are often suggested as alternatives. But they have a fundamental limitation: full deserialization is required. Every field must be parsed and allocated, even if you only need 3 fields from a 21-field object.

| Feature | TerseJSON | Protobuf | MessagePack |

|---|---|---|---|

| Partial field access | Only accessed fields allocate | Full deserialization | Full deserialization |

| Memory (3/21 fields) | ~0 MB | ~4 MB | ~4 MB |

| Wire compression | 30-80% | 80-90% | 70-80% |

| Schema required | No | Yes (.proto files) | No |

| Human-readable | Yes (JSON) | No (binary) | No (binary) |

| Migration effort | 5 minutes | Days/weeks | Hours |

The bottom line: Protobuf wins on wire compression, but requires full deserialization. TerseJSON wins on memory efficiency — only the fields you access get allocated. For most real-world apps, that's the bigger win.

4Real-World Use Cases

TerseJSON shines when you fetch objects with many fields but only render a subset. This is extremely common:

CMS List Views

Fetch 1000 articles with 21 fields, render title + slug + excerpt (3 fields)

E-commerce Product Lists

Fetch products with 30+ fields, show name + price + image (3 fields)

Dashboard Aggregates

Fetch user records for charts, aggregate 2-3 fields from 15+ field objects

Mobile Infinite Scroll

Memory-constrained devices loading paginated data continuously

// CMS fetches 1000 articles with 21 fields each

const articles = await terseFetch('/api/articles');

// But list view only needs 3 fields

articles.map(a => ({

title: a.title,

slug: a.slug,

excerpt: a.excerpt

}));

// Result: Only 3 keys translated per object

// The other 18 fields stay compressed in memory

// Memory saved: ~100%

5Network Bonus: Smaller Payloads Too

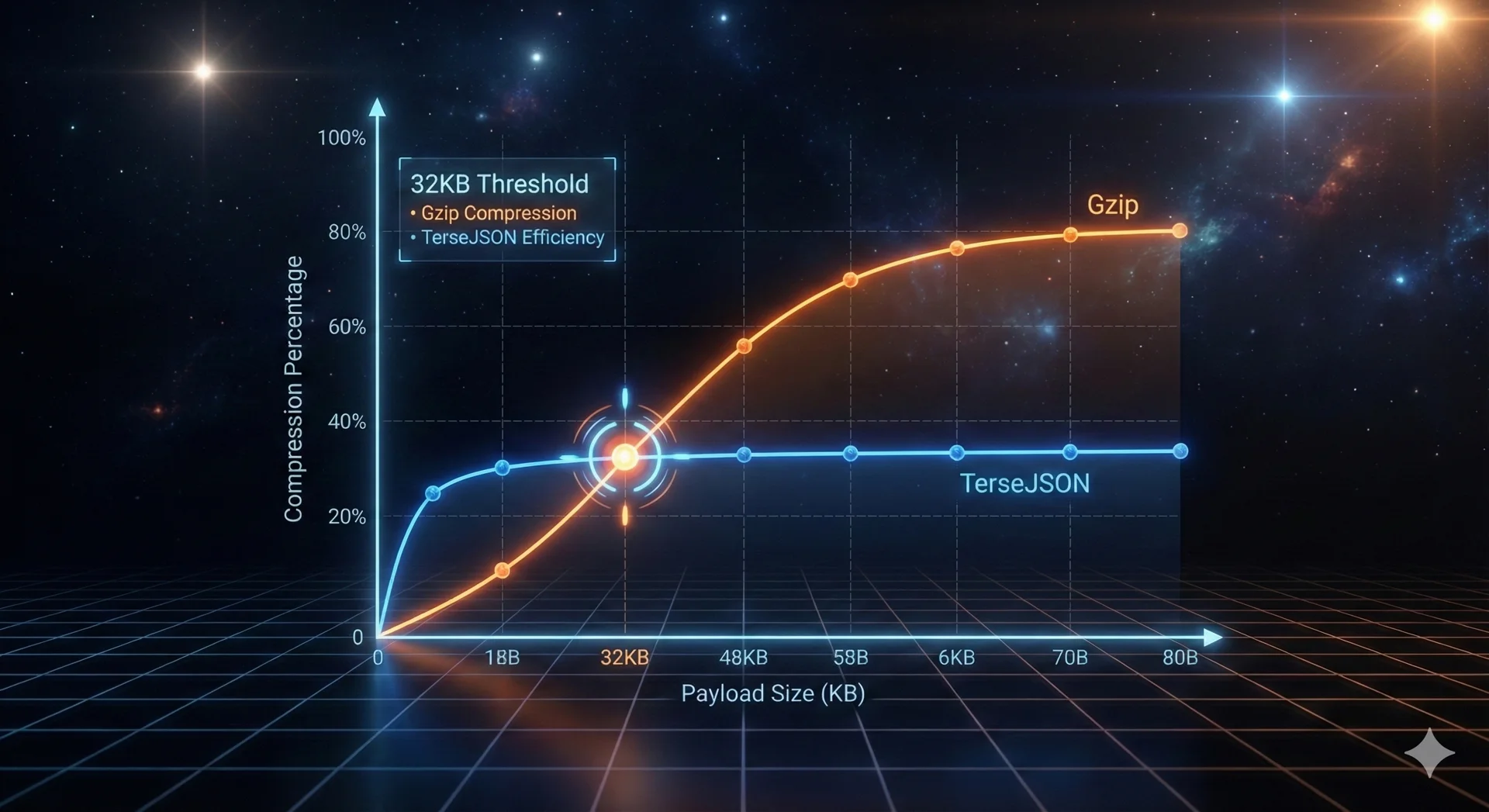

Memory efficiency is the primary benefit, but you also get significant network savings. TerseJSON compresses repetitive JSON keys, reducing payload size by 30-80%.

Bandwidth Savings by Endpoint Type

| Endpoint Type | Compression Rate | Why |

|---|---|---|

| Products API | 38.5-38.6% | Many repeated keys (name, price, category, description, etc.) |

| Users API | 18-33% | Nested objects (address, metadata) with repeated subkeys |

| Logs API | 26.3-26.4% | Consistent structure but shorter key names |

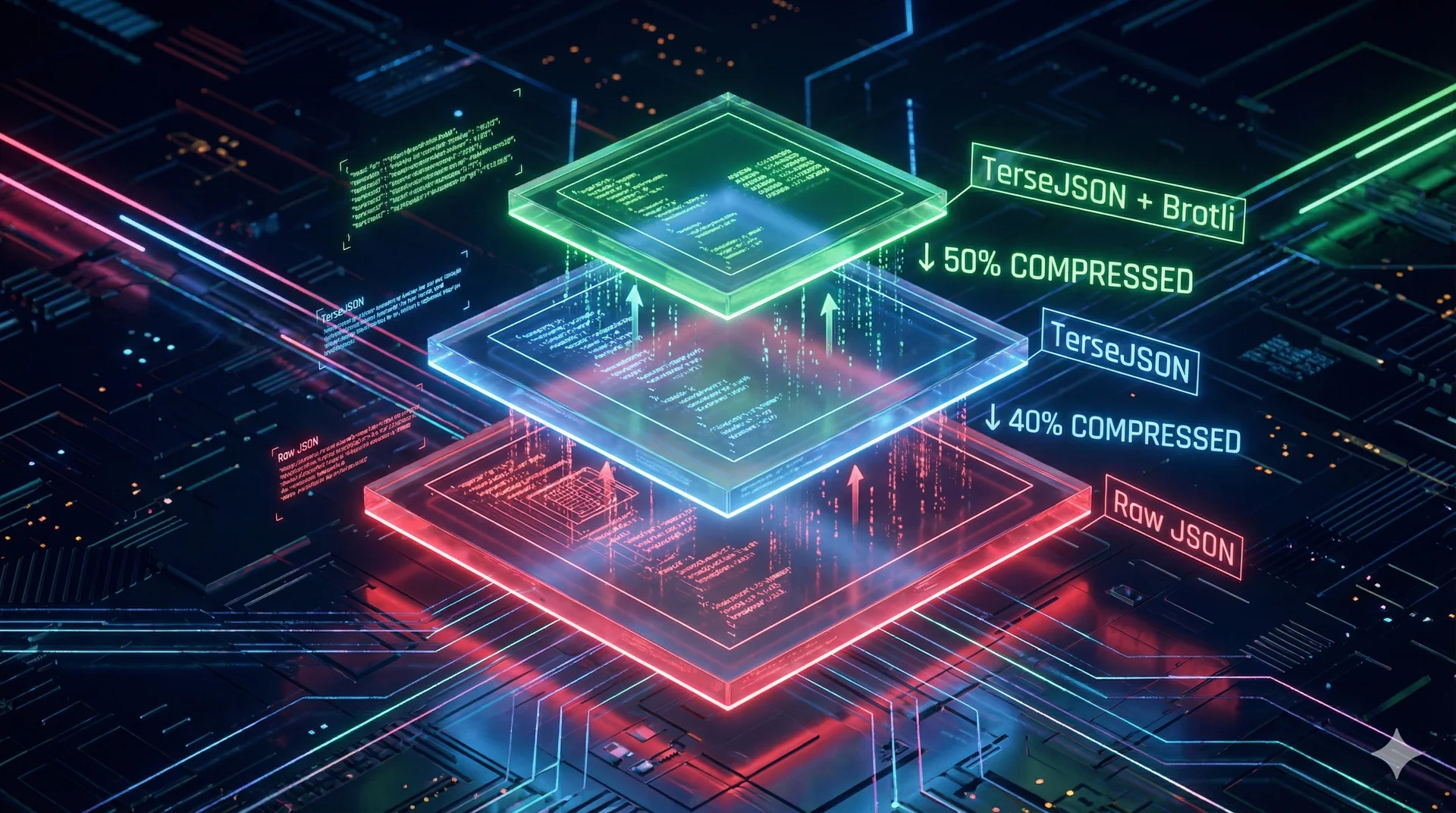

Stacking with Gzip/Brotli

| Method | Reduction | Best For |

|---|---|---|

| TerseJSON alone | ~31% | Quick wins, no server config needed |

| TerseJSON + Gzip | ~85% | Production with nginx/CDN |

| TerseJSON + Brotli | ~93% | Maximum compression |

6Mobile Performance Impact

Mobile devices are memory-constrained and often on slower networks. TerseJSON helps on both fronts: less memory allocation AND smaller payloads.

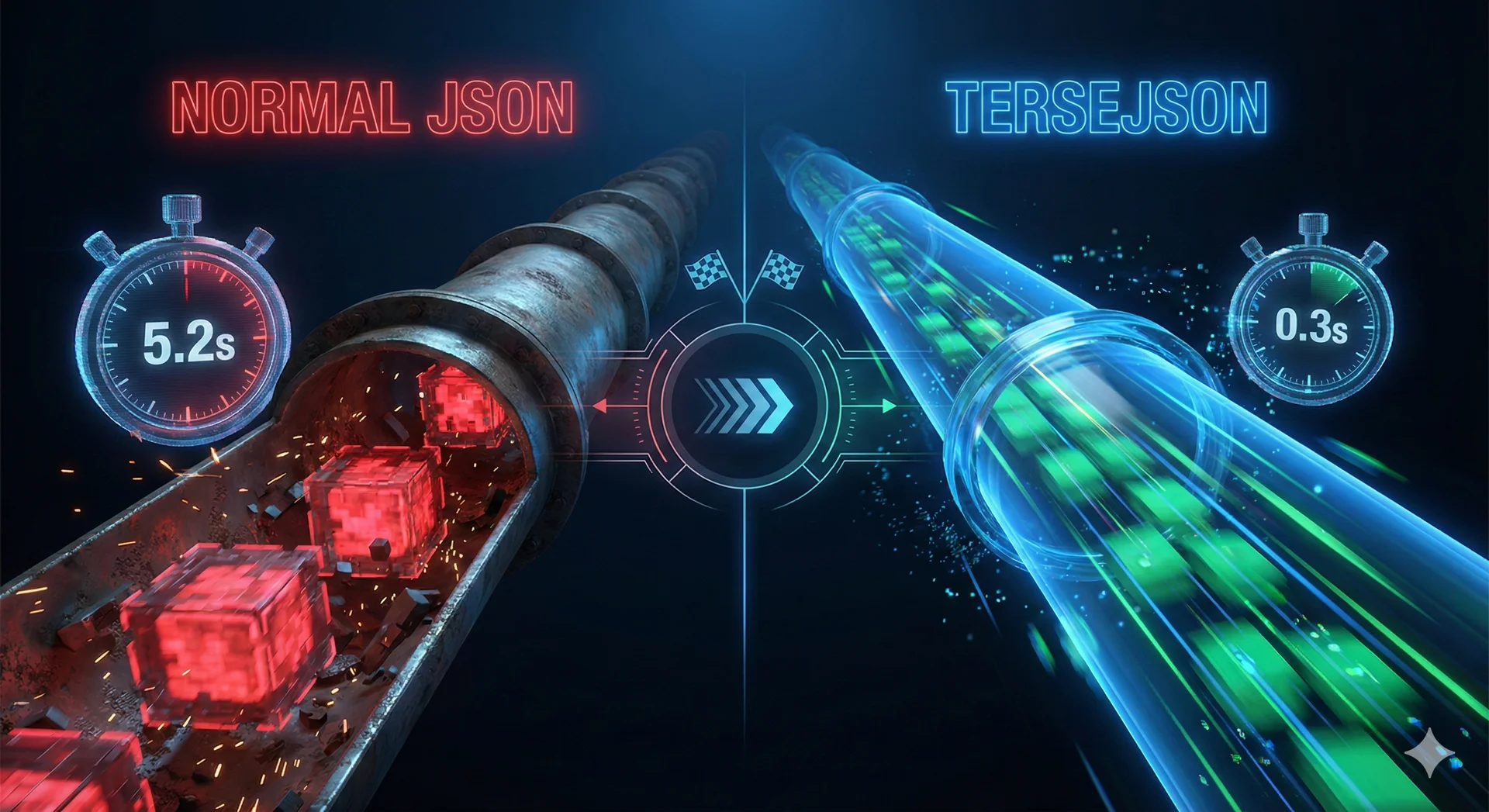

| Network | Normal JSON | TerseJSON | Time Saved |

|---|---|---|---|

| 4G (20 Mbps) | 200ms | 30ms | 170ms (85%) |

| 3G (2 Mbps) | 2,000ms | 300ms | 1,700ms (85%) |

| Slow 3G (400 Kbps) | 10,000ms | 1,500ms | 8,500ms (85%) |

User perception: 10 seconds = "Is this broken?" → User leaves. 1.5 seconds = "That was quick!" → User stays.Every 100ms of latency costs 1% in conversions (Amazon/Google studies)

7Enterprise Cost Savings

At enterprise scale, memory + bandwidth savings compound. Less memory per request = more requests per server. Smaller payloads = lower egress costs.

| Scale | Daily Traffic | Monthly Bandwidth Saved | Cost Reduction* |

|---|---|---|---|

| Startup | 1M requests | 9.3 GB | $0.84 |

| Growth | 10M requests | 93 GB | $8.37 |

| Scale | 100M requests | 930 GB | $83.70 |

| Enterprise | 1B requests | 9.3 TB | $837.00 |

*At $0.09/GB (AWS CloudFront pricing). Memory savings translate to reduced server instances.

8Integration: 5 Minutes to Memory Savings

Server Setup (2 lines)

import { terse } from 'tersejson/express';

app.use(terse());Client Setup (1 line change)

// Before

const data = await fetch('/api/users').then(r => r.json());

// After

import { createFetch } from 'tersejson/client';

const terseFetch = createFetch();

const data = await terseFetch('/api/users').then(r => r.json());

// data works exactly the same — Proxy handles expansion transparently

9Run the Benchmarks Yourself

Don't take our word for it. Run the benchmarks on your own machine:

# Clone the repo git clone https://github.com/timclausendev-web/tersejson cd tersejson/demo # Memory benchmark (requires --expose-gc) node --expose-gc memory-analysis.js # CPU benchmark node cpu-benchmark.js

10Summary: Memory-First JSON Processing

"TerseJSON: 70% less memory, 86% fewer allocations"

Primary: Memory Efficiency

Secondary: Network Savings

Ready for memory-efficient JSON?

Get started with TerseJSON in under 5 minutes.